Memory-Efficient Fine-Tuning of Compressed Large Language Models via sub-4-bit Integer Quantization

[TL;DR]

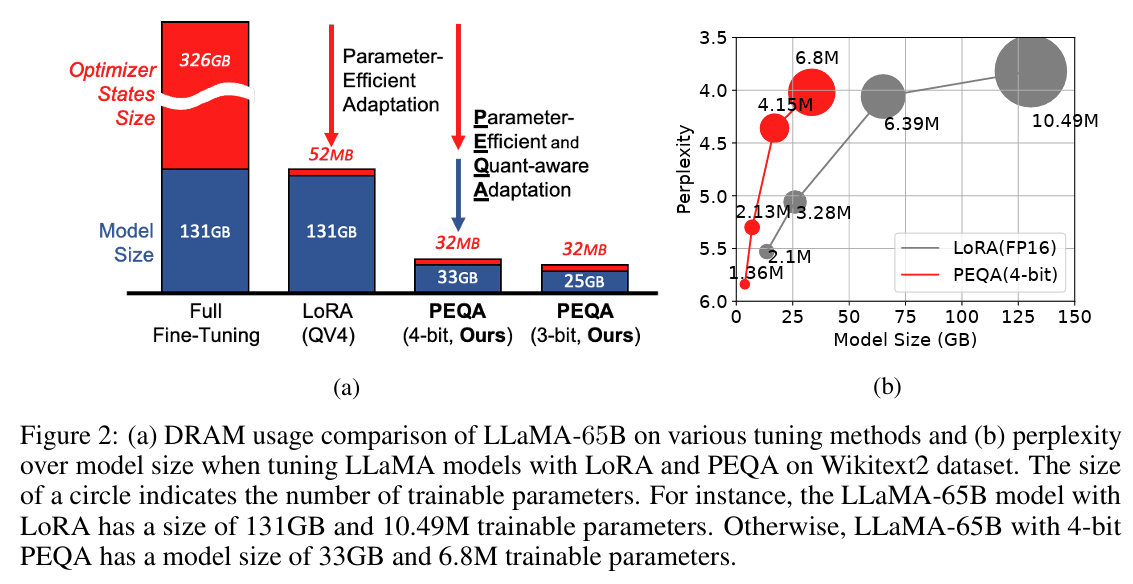

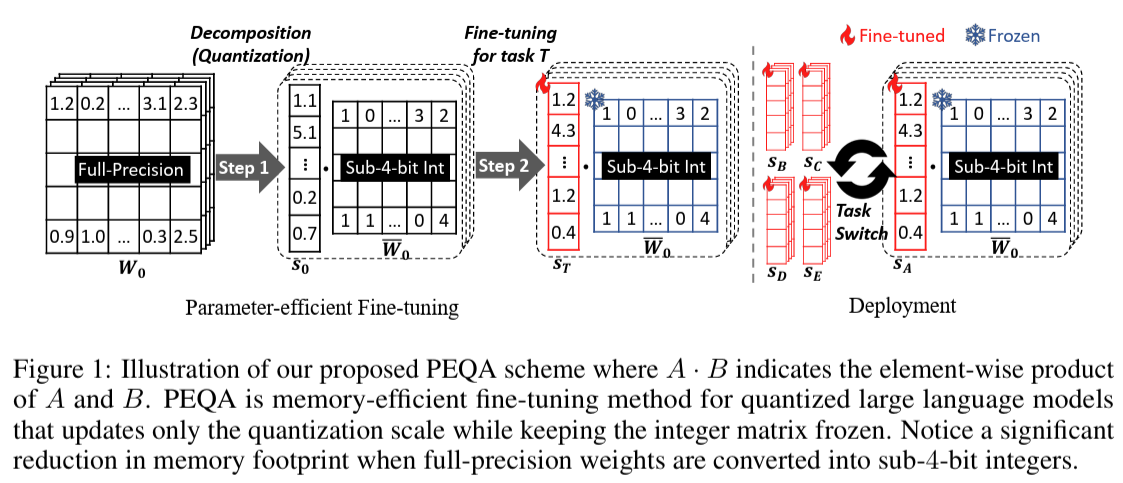

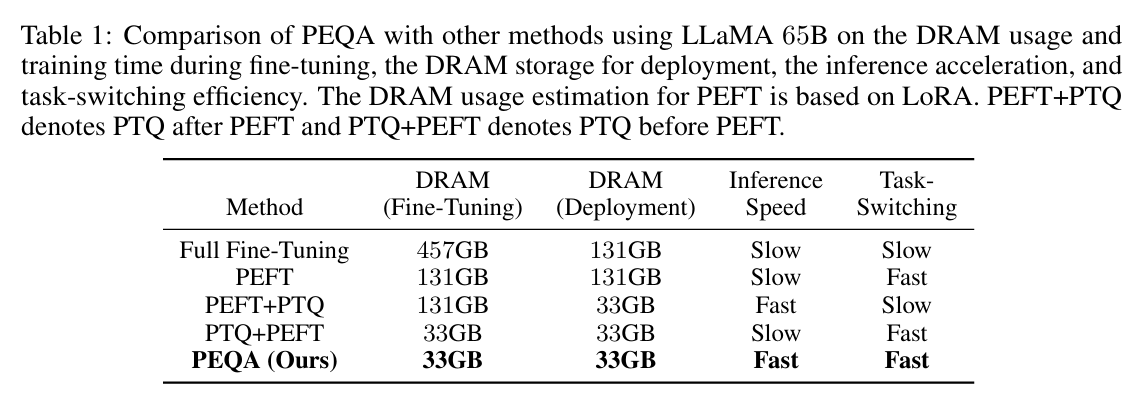

PEQA is a novel fine-tuning approach that integrates Parameter-Efficient Fine-Tuning (PEFT) with weight-only quantized LLMs by updating only the quantization scales while keeping the low-bit integer weight matrices frozen. This yields substantial memory savings, seamless task adaptation, and inference acceleration.

Highlights

- Integrates PEFT with quantized LLMs, updating only the quantization scales while keeping integer matrices frozen.

- Reduces memory consumption during fine-tuning and deployment, making LLM adaptation feasible even for resource-constrained settings.

- Preserves the benefits of quantization after fine-tuning, so inference remains accelerated.

- Recovers performance well even for sub-4-bit quantized models when fine-tuned on large-scale instruction datasets.

- Scales up to 65B-parameter models while achieving performance close to full-precision LoRA fine-tuning.

Summary

- Problem Statement: LLM fine-tuning is memory-intensive even with PEFT methods such as LoRA, because the frozen full-precision weights remain a memory bottleneck. Quantization can reduce memory, but it is typically applied after training, which limits adaptability.

- Solution: PEQA bridges this gap by fine-tuning only the quantization scales of a pre-quantized LLM while keeping the integer weights frozen. This enables task-specific adaptation with minimal overhead.

- PEQA Framework:

  - Step 1, Decomposition: Pre-trained weights are quantized into sub-4-bit integer matrices with associated per-channel scaling factors.

  - Step 2, Fine-tuning: Only the quantization scales are updated while the integer matrices stay frozen, which sharply reduces the number of learnable parameters.
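The two steps can be sketched in NumPy as follows. This is a minimal illustration, assuming per-row round-to-nearest quantization with min-max calibration to initialize the scales and zero-points; the function names `decompose` and `dequantize` are hypothetical, and the paper's actual initialization procedure may differ.

```python
import numpy as np


def decompose(W0: np.ndarray, b: int = 4):
    """Step 1 (sketch): split an (n, m) weight into a frozen b-bit integer
    matrix plus per-row scales s0 and zero-points z0, each of shape (n, 1).

    Assumes min-max calibration and round-to-nearest; illustrative only.
    """
    qmax = 2**b - 1
    w_min = W0.min(axis=1, keepdims=True)
    w_max = W0.max(axis=1, keepdims=True)
    # Map each row's [min, max] range onto the integer grid [0, qmax].
    s0 = (w_max - w_min) / qmax
    s0 = np.where(s0 == 0, 1.0, s0)  # avoid division by zero for constant rows
    z0 = np.round(-w_min / s0)
    W_int = np.clip(np.round(W0 / s0) + z0, 0, qmax).astype(np.uint8)
    return W_int, s0, z0


def dequantize(W_int: np.ndarray, s: np.ndarray, z0: np.ndarray) -> np.ndarray:
    """W_hat = s * (W_int - z0), broadcasting one scale per output row."""
    return s * (W_int.astype(np.float64) - z0)


rng = np.random.default_rng(0)
W0 = rng.standard_normal((8, 16))
W_int, s0, z0 = decompose(W0, b=4)

# Step 2 (sketch): the only trainable tensor is a per-row scale offset.
delta_s = np.zeros_like(s0)
print(W_int.dtype, delta_s.size, W0.size)  # uint8 8 128
```

In this toy layer, 8 scale offsets are trainable instead of 128 weights; for an n × m layer the trainable fraction is 1/m.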

Key Advantages

Memory Efficiency

- Fine-tunes only the quantization scales, significantly reducing memory overhead.

- Optimized for low-bit integer quantization (≤ 4-bit) while maintaining high accuracy.
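The arithmetic behind these bullets can be checked for a single layer. The sketch below assumes a hypothetical 4096 × 4096 projection, fp16 storage for full-precision weights and quantization parameters, and LoRA rank 8 for comparison. It counts weight storage and trainable parameters only, not activations or optimizer states.

```python
def layer_memory(n: int, m: int, b: int = 4, lora_rank: int = 8) -> dict:
    """Back-of-the-envelope costs for one n x m linear layer (illustrative).

    Assumes fp16 (2-byte) storage for full-precision weights, scales, and
    zero-points, and b-bit integers packed densely for PEQA's weights.
    """
    fp16_weight_bytes = n * m * 2
    int_weight_bytes = n * m * b // 8
    qparam_bytes = n * 2 * 2  # one fp16 scale + one fp16 zero-point per row
    return {
        "fp16_weight_bytes": fp16_weight_bytes,
        "peqa_weight_bytes": int_weight_bytes + qparam_bytes,
        "peqa_trainable": n,                    # Delta s has shape (n, 1)
        "lora_trainable": lora_rank * (n + m),  # B is (n, r), A is (r, m)
    }


stats = layer_memory(4096, 4096, b=4, lora_rank=8)
ratio = stats["fp16_weight_bytes"] / stats["peqa_weight_bytes"]
print(stats["peqa_trainable"], stats["lora_trainable"], round(ratio, 3))  # 4096 65536 3.992
```

At b = 4 the weight footprint shrinks by just under 16/b = 4×, because the per-channel scales and zero-points add a small overhead. The trainable-parameter count (4,096 vs. 65,536 here) also matters, because optimizer states grow with the number of trainable parameters.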

Seamless Task Switching

- PEQA enables quick and efficient adaptation across different tasks by swapping quantization scales instead of retraining entire models.
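Task switching can be pictured as one shared frozen integer matrix with a small dictionary of per-task scale vectors. The class below is a hypothetical NumPy sketch, not the paper's implementation; the scales for "task_a" are a synthetic stand-in for scales that would come from fine-tuning.

```python
import numpy as np


class PEQALinear:
    """Hypothetical layer: one frozen integer matrix shared by all tasks,
    plus one (n, 1) scale vector per task."""

    def __init__(self, W_int: np.ndarray, z0: np.ndarray, s0: np.ndarray):
        # Frozen for every task: (W_int - z0), i.e. W_bar_0 in the Notations section.
        self.W_bar = W_int.astype(np.float64) - z0
        self.task_scales = {"base": s0.copy()}
        self.active = "base"

    def add_task(self, name: str, s: np.ndarray) -> None:
        self.task_scales[name] = s

    def switch(self, name: str) -> None:
        # Task switching only changes which scale vector is used.
        self.active = name

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return (self.task_scales[self.active] * self.W_bar) @ x


rng = np.random.default_rng(0)
W_int = rng.integers(0, 16, size=(4, 6))
z0 = np.full((4, 1), 8.0)
s0 = np.full((4, 1), 0.05)

layer = PEQALinear(W_int, z0, s0)
layer.add_task("task_a", s0 * 1.1)  # stand-in for scales fine-tuned on task A
x = rng.standard_normal(6)
y_base = layer(x)
layer.switch("task_a")
y_task_a = layer(x)
```

Each additional task costs only n extra numbers per layer, while the integer matrix is stored once.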

Faster Inference

- The integer matrix stays frozen and intact, so the fine-tuned model can still run on quantized inference kernels and keep their speedup.
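Fine-tuning leaves the weight in the form scale × (integer − zero-point), with one scale per output row. The NumPy sketch below (not a real GPU kernel; function names are hypothetical) checks that such a per-row scale can be applied after the product with the integer weights, the kind of structure low-bit weight-only kernels can exploit without ever materializing a full-precision weight.

```python
import numpy as np


def dequant_then_matmul(W_int, z0, s, X):
    # Reference path: materialize the full-precision weight, then multiply.
    W_hat = s * (W_int.astype(np.float64) - z0)
    return W_hat @ X


def matmul_then_scale(W_int, z0, s, X):
    # Because s holds one scale per output row, it factors out of each row's dot
    # product: Y[i] = s[i] * sum_j (W_int[i, j] - z0[i]) * X[j].
    # Only the (n, 1) vector s differs between the pre-trained and fine-tuned model.
    acc = (W_int.astype(np.float64) - z0) @ X
    return s * acc


rng = np.random.default_rng(0)
W_int = rng.integers(0, 8, size=(6, 10))            # 3-bit codes
z0 = np.full((6, 1), 4.0)
s_finetuned = rng.uniform(0.01, 0.1, size=(6, 1))  # stand-in for s0 + delta_s
X = rng.standard_normal((10, 3))

Y_ref = dequant_then_matmul(W_int, z0, s_finetuned, X)
Y_fast = matmul_then_scale(W_int, z0, s_finetuned, X)
```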

Experiments

Memory and General Comparison

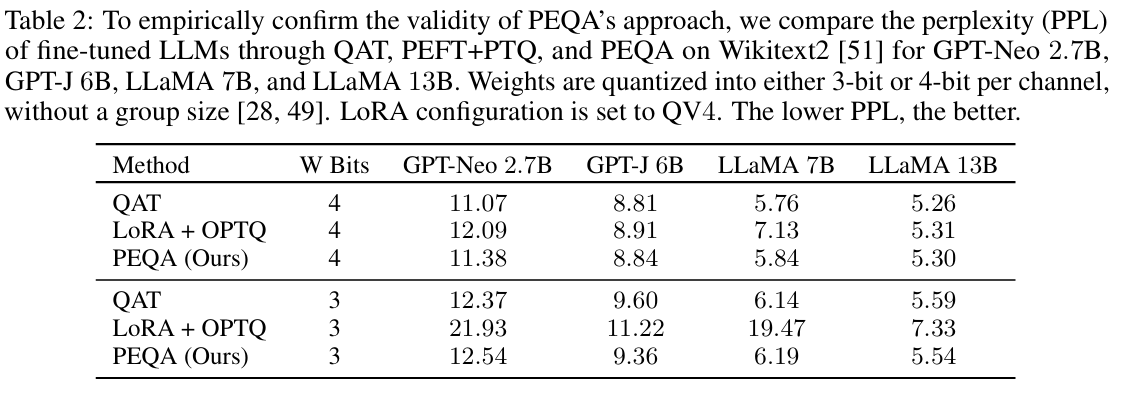

PEQA vs. QAT vs. PEFT+PTQ

- PEQA achieves performance close to QAT, significantly outperforming LoRA + PTQ at 3-bit and 4-bit precision.

- PEQA's lower perplexity than LoRA + PTQ indicates that quantized models can be fine-tuned effectively without sacrificing accuracy.
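Perplexity, the metric in this comparison, is the exponential of the average negative log-likelihood per token, so lower values mean the model assigns higher probability to held-out text. A minimal sketch, assuming natural-log per-token probabilities are already available from the model:

```python
import math


def perplexity(token_log_probs: list[float]) -> float:
    """exp of the mean negative log-likelihood over N evaluated tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that assigns probability 0.25 to every token has perplexity 4.
ppl_uniform = perplexity([math.log(0.25)] * 4)  # ≈ 4.0
```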

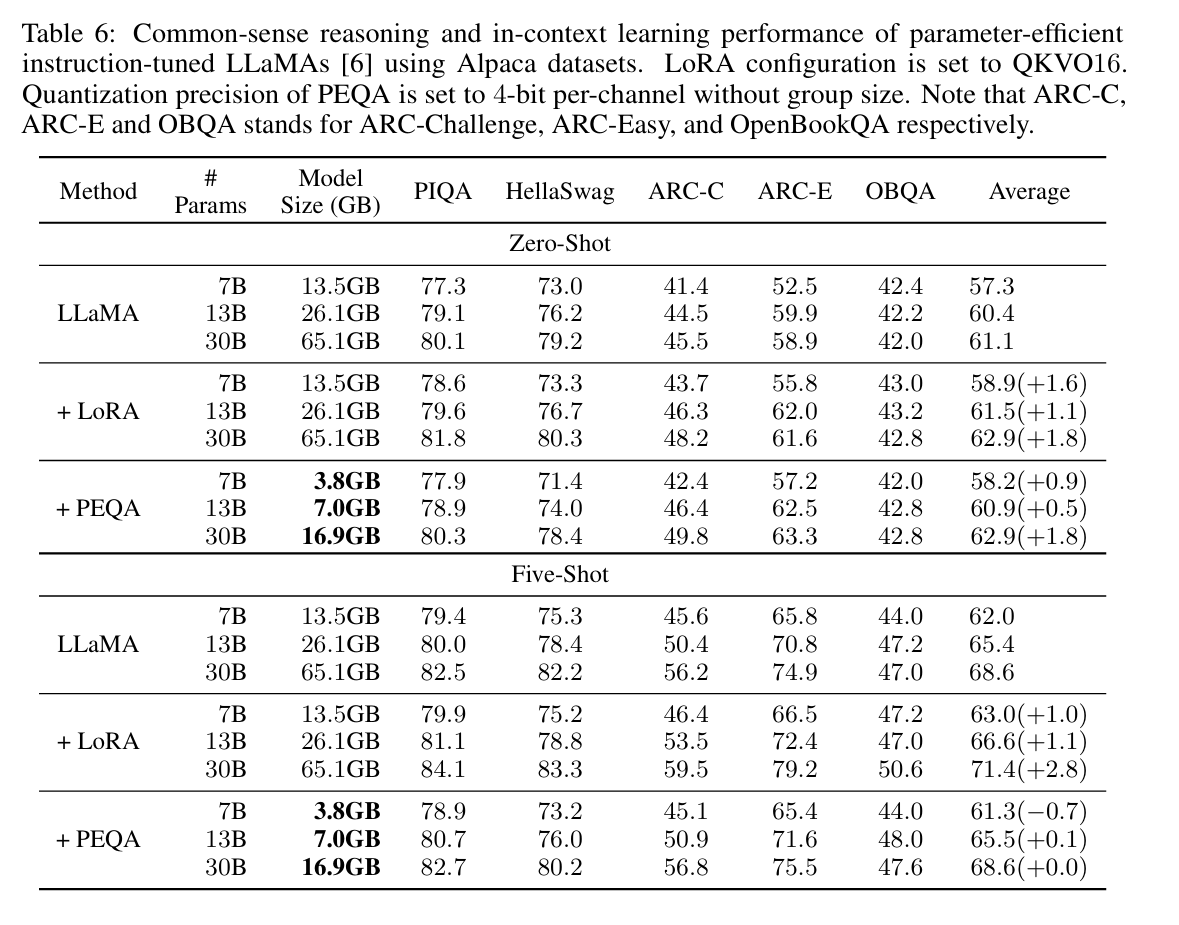

Instruction-Tuning with Alpaca Dataset

- Evaluated on common-sense reasoning and in-context learning tasks (ARC, PIQA, HellaSwag).

- Performance comparable to LoRA, with additional memory savings and inference acceleration.

Notations

Quantized Weights and Fine-Tuning

- Weight-only asymmetric quantization:

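Given a fully-connected layer \(\mathbf{W}_0 \in \mathbb{R}^{n \times m}\), a target bit-width \(b\), and per-channel scales and zero-points \(\mathbf{s}_0, \mathbf{z}_0 \in \mathbb{R}^{n \times 1}\), the asymmetrically quantized pre-trained weights \(\widehat{\mathbf{W}}_0\) can be written as

\(\widehat{\mathbf{W}}_0 = \mathbf{s}_0 \cdot \overline{\mathbf{W}}_0 = \mathbf{s}_0 \cdot \left( \text{clamp} \left( \left\lfloor \frac{\mathbf{W}_0}{\mathbf{s}_0} \right\rceil + \mathbf{z}_0, 0, 2^b - 1 \right) - \mathbf{z}_0 \right)\)

where \(\lfloor \cdot \rceil\) denotes rounding to the nearest integer, and the division and the product \(\cdot\) are applied per output channel (row-wise broadcasting of the \(n \times 1\) vectors).

- PEQA fine-tuning modifies only the quantization scales:

\(\widehat{\mathbf{W}} = (\mathbf{s}_0 + \Delta \mathbf{s}) \cdot \overline{\mathbf{W}}_0 = (\mathbf{s}_0 + \Delta \mathbf{s}) \cdot \left( \text{clamp} \left( \left\lfloor \frac{\mathbf{W}_0}{\mathbf{s}_0} \right\rceil + \mathbf{z}_0, 0, 2^b - 1 \right) - \mathbf{z}_0 \right)\) where \(\overline{\mathbf{W}}_0\) is frozen and \(\Delta \mathbf{s} \in \mathbb{R}^{n \times 1}\) is the update to \(\mathbf{s}_0\) learned by gradient-based adaptation to a downstream task.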
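Because \(\overline{\mathbf{W}}_0\) is frozen, the gradient with respect to each scale offset is a row-wise sum: with \(\widehat{W}_{ij} = (s_{0,i} + \Delta s_i)\,\overline{W}_{0,ij}\), we get \(\partial L / \partial \Delta s_i = \sum_j (\partial L / \partial \widehat{W}_{ij})\,\overline{W}_{0,ij}\). The NumPy sketch below applies this to a toy problem. The squared-error loss, the synthetic target, and plain gradient descent are assumptions chosen for illustration; in practice an autograd framework would compute this gradient.

```python
import numpy as np


def peqa_forward(s0, delta_s, W_bar, X):
    # W_hat = (s0 + delta_s) * W_bar, one scale per output row; Y = W_hat @ X.
    return ((s0 + delta_s) * W_bar) @ X


def grad_delta_s(s0, delta_s, W_bar, X, T):
    """Gradient of L = 0.5 * ||Y - T||_F^2 with respect to delta_s only."""
    dY = peqa_forward(s0, delta_s, W_bar, X) - T   # dL/dY
    dW_hat = dY @ X.T                              # dL/dW_hat, shape (n, m)
    # dL/d(delta_s_i) = sum_j dL/dW_hat_ij * W_bar_ij
    return (dW_hat * W_bar).sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
n, m, k = 4, 8, 32
# Frozen W_bar: 4-bit codes minus a zero-point of 8.
W_bar = rng.integers(0, 16, size=(n, m)).astype(np.float64) - 8.0
s0 = np.full((n, 1), 0.1)
X = rng.standard_normal((m, k))
# Synthetic "downstream task": the ideal scales are s0 + 0.05.
T = peqa_forward(s0, np.full((n, 1), 0.05), W_bar, X)

delta_s = np.zeros((n, 1))  # the only trainable tensor
# Rows are independent and the loss is quadratic in each delta_s_i with
# curvature sum_k ((W_bar @ X)_ik)^2, so lr = 1 / max curvature is stable.
lr = 1.0 / np.max(np.sum((W_bar @ X) ** 2, axis=1))
loss_before = 0.5 * np.sum((peqa_forward(s0, delta_s, W_bar, X) - T) ** 2)
for _ in range(500):
    delta_s -= lr * grad_delta_s(s0, delta_s, W_bar, X, T)
loss_after = 0.5 * np.sum((peqa_forward(s0, delta_s, W_bar, X) - T) ** 2)
```

After training, `delta_s` recovers the 0.05 offset while `W_bar` is never modified: only n = 4 numbers are learned for a 4 × 8 layer.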

Conclusion

PEQA is a memory-efficient fine-tuning approach for weight-only quantized LLMs. By updating only the quantization scales while keeping the integer matrices fixed, PEQA achieves:

- Comparable accuracy to full-precision PEFT methods

- Significant memory savings (up to 4× reduction)

- Seamless adaptation to new tasks

- Faster inference without additional post-processing

PEQA enables scalable and efficient adaptation of large language models, making their deployment on memory-constrained devices practical.