A Unified Point-Based Framework for 3D Segmentation

3DV 2019

Top performing method in 2019

Motivations

- Surface normals describe object shapes

- Color captures object textures

- A global scene prior is crucial for semantics, for example, distinguishing a curtain in a living room from a shower curtain in a restroom

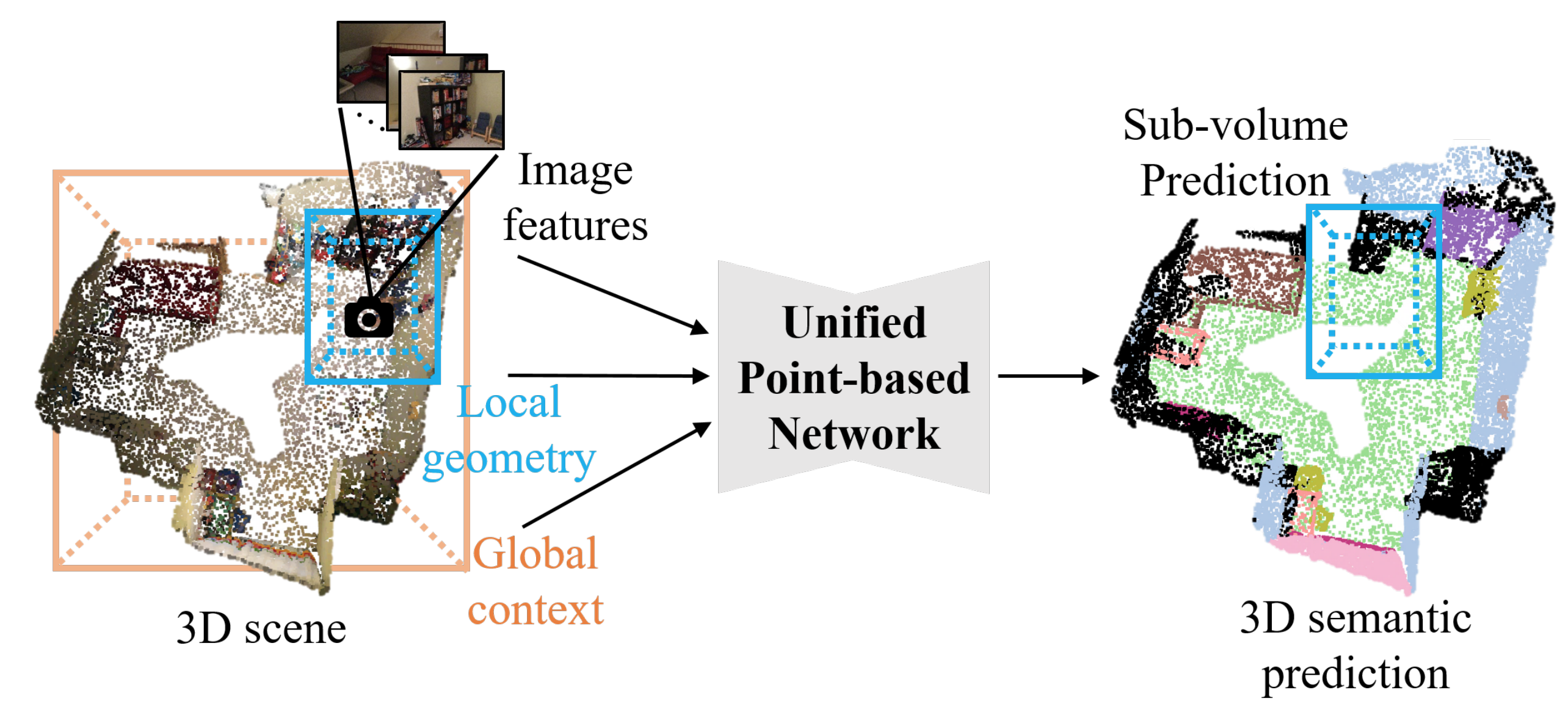

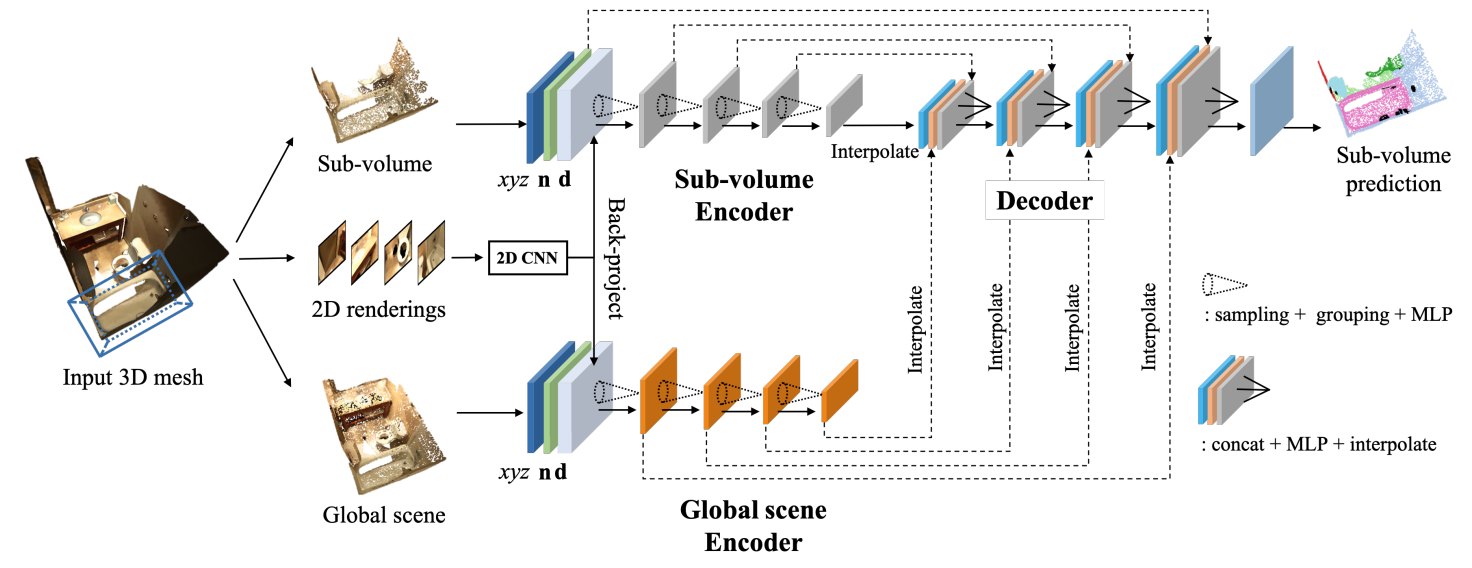

Method Overview

We extract image features by applying a 2D segmentation network and backproject the 2D image features into 3D space. The 2D image features and 3D point features are then concatenated at the same point coordinates. The sub-volume encoder and the scene encoder extract features for local details and global context priors, respectively. The decoder fuses the local and global features and generates a semantic prediction for each point in the sub-volume.
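To make the backprojection and concatenation steps concrete, here is a minimal NumPy sketch. It is not the authors' implementation: it assumes a pinhole camera with known intrinsics `K`, a camera-to-world pose, and a depth map aligned with the 2D feature map. The function names `backproject_features` and `concat_point_features` are hypothetical and chosen only for illustration.

```python
import numpy as np


def backproject_features(depth, feat, K, cam_to_world):
    """Lift per-pixel 2D features to 3D world points (illustrative sketch).

    depth:        (H, W) depth map, 0 where depth is invalid (assumption)
    feat:         (H, W, C) feature map from a 2D network
    K:            (3, 3) pinhole intrinsics (assumption)
    cam_to_world: (4, 4) camera-to-world pose (assumption)
    Returns (N, 3) world points and (N, C) features for the valid pixels.
    """
    H, W = depth.shape
    v, u = np.mgrid[0:H, 0:W]          # v: pixel rows, u: pixel columns
    valid = depth > 0                  # keep only pixels with a depth reading
    z = depth[valid]
    x = (u[valid] - K[0, 2]) * z / K[0, 0]
    y = (v[valid] - K[1, 2]) * z / K[1, 1]
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=1)   # (N, 4) homogeneous
    pts_world = (cam_to_world @ pts_cam.T).T[:, :3]          # (N, 3)
    return pts_world, feat[valid]


def concat_point_features(point_feat_3d, image_feat_2d):
    """Concatenate 3D point features and 2D image features point by point."""
    return np.concatenate([point_feat_3d, image_feat_2d], axis=1)
```

Both inputs to `concat_point_features` must be ordered by the same points, so each row of the output describes one 3D location with its geometric features (for example color and normal) followed by its image features.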

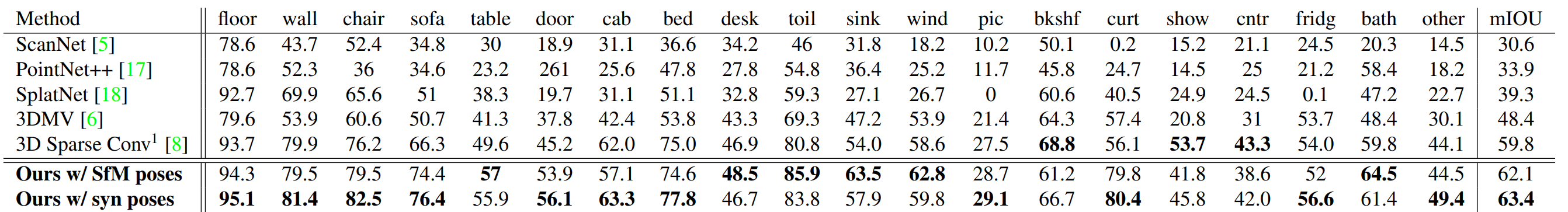
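One common way to fuse a global scene descriptor with per-point local features is to tile the global vector and concatenate it to every point. The sketch below illustrates that idea only; the max-pooled scene descriptor and the tile-and-concatenate fusion are assumptions, not details confirmed by this page, and the function names are hypothetical.

```python
import numpy as np


def global_max_pool(scene_point_feat):
    """Summarize a scene as one vector by max-pooling over points (assumption).

    scene_point_feat: (M, C_global) per-point features from a scene encoder
    Returns a (C_global,) scene descriptor.
    """
    return scene_point_feat.max(axis=0)


def fuse_local_global(local_feat, global_feat):
    """Attach the same global descriptor to every local point feature.

    local_feat:  (N, C_local) per-point features from a sub-volume encoder
    global_feat: (C_global,) scene descriptor
    Returns (N, C_local + C_global) fused features for a decoder to consume.
    """
    n_points = local_feat.shape[0]
    tiled = np.broadcast_to(global_feat, (n_points, global_feat.shape[0]))
    return np.concatenate([local_feat, tiled], axis=1)
```

With this layout, every point in the sub-volume sees the same scene-level context, which is the kind of signal that could separate a curtain from a shower curtain when local geometry alone is ambiguous.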

Main Results

Results on the ScanNet test set. Our unified framework outperforms several state-of-the-art methods by a large margin. The gain comes from jointly optimizing 2D image features, 3D structures, and global context in a point-based architecture. With synthetic camera poses, we can further improve our performance (a gain of +1.3%).

Citation

@inproceedings{chiang2019unified,
  title = {A unified point-based framework for 3d segmentation},
  author = {Chiang, Hung-Yueh and Lin, Yen-Liang and Liu, Yueh-Cheng and Hsu, Winston H},
  booktitle = {International Conference on 3D Vision (3DV)},
  pages = {155--163},
  year = {2019},
  organization = {IEEE},
}

Acknowledgements

This work was supported in part by the Ministry of Science and Technology, Taiwan, under Grant MOST 108-2634-F-002-004 and by FIH Mobile Limited. We also benefited from NVIDIA grants and the DGX-1 AI Supercomputer.