MobileTL: On-device Transfer Learning with Inverted Residual Blocks

AAAI 2023 Oral Paper

[slides] [poster] [demo]

1.5× fewer FLOPs

2× memory reduction for Inverted Residual Blocks

Motivations

- Inverted Residual Blocks (IRBs) are widely used in mobile-friendly models

- IRBs are designed for efficient inference on edge devices

- Training IRB-based models on edge devices is challenging

- IRBs require 3× more activation storage for the backward pass

Method

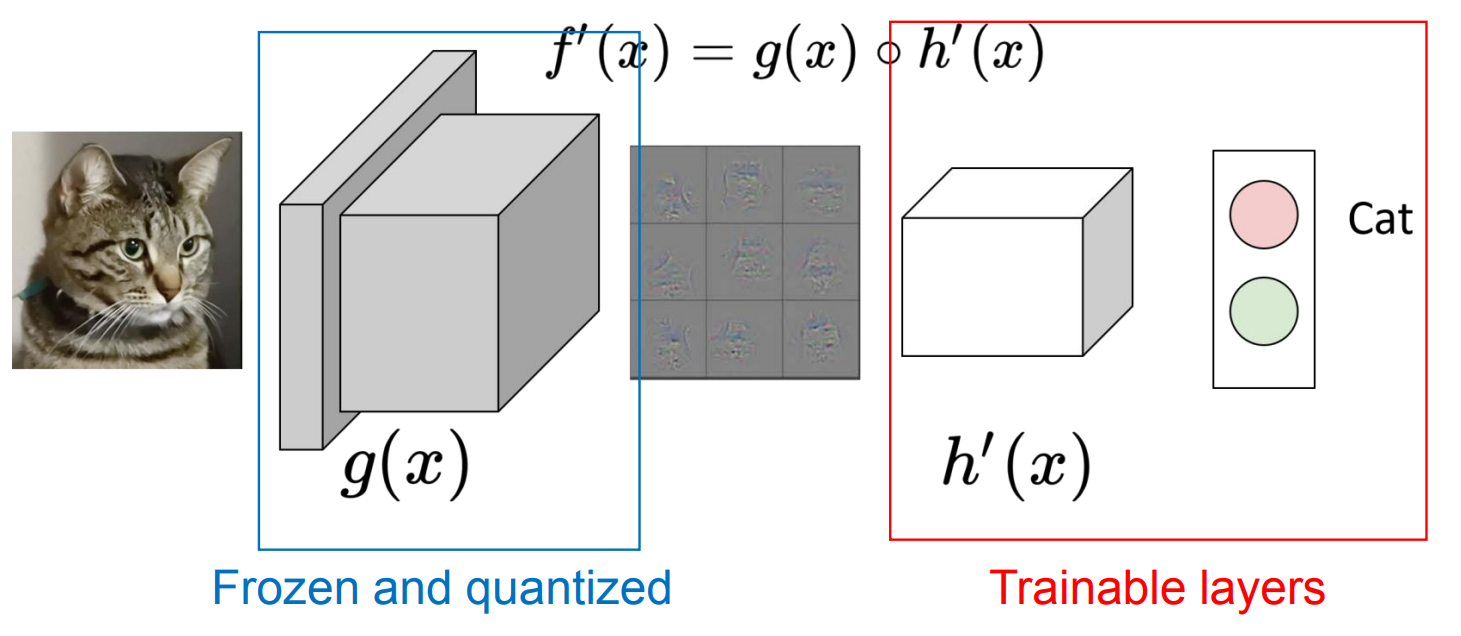

- Freeze low-level layers and fine-tune high-level layers

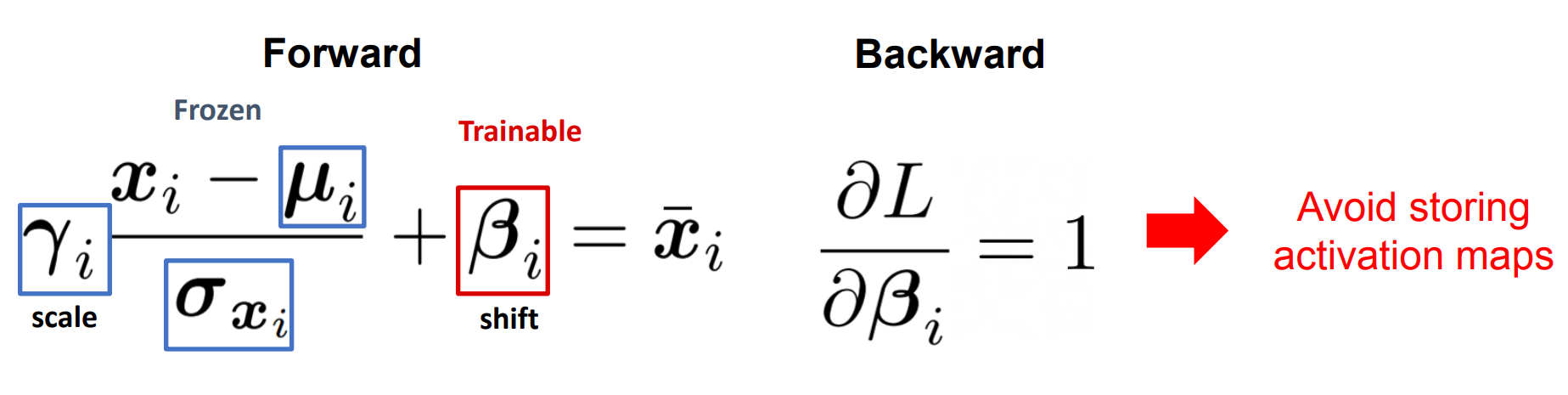

- Freeze global statistics and update bias only for intermediate normalization layers

- Approximate the backward pass of activation layers with a signed function
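As a rough sketch of why the bias-only update for intermediate normalization layers saves memory, assume the standard normalization form with frozen global statistics $\mu$ and $\sigma^2$, a frozen scale $\gamma$, and a trainable shift $\beta$. The symbols and the loss $L$ are our notation, not taken from the source, and $i$ runs over the batch and spatial positions of one channel:

$$
y_i = \gamma\,\frac{x_i - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta,
\qquad
\frac{\partial L}{\partial \beta} = \sum_i \frac{\partial L}{\partial y_i},
\qquad
\frac{\partial L}{\partial x_i} = \frac{\gamma}{\sqrt{\sigma^2 + \epsilon}}\,\frac{\partial L}{\partial y_i}
$$

Under these assumptions neither gradient depends on $x_i$, so the layer input does not have to be kept for the backward pass. Training the scale as well would need $\partial L / \partial \gamma = \sum_i \frac{\partial L}{\partial y_i}\,\frac{x_i - \mu}{\sqrt{\sigma^2 + \epsilon}}$, which does require the stored input.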

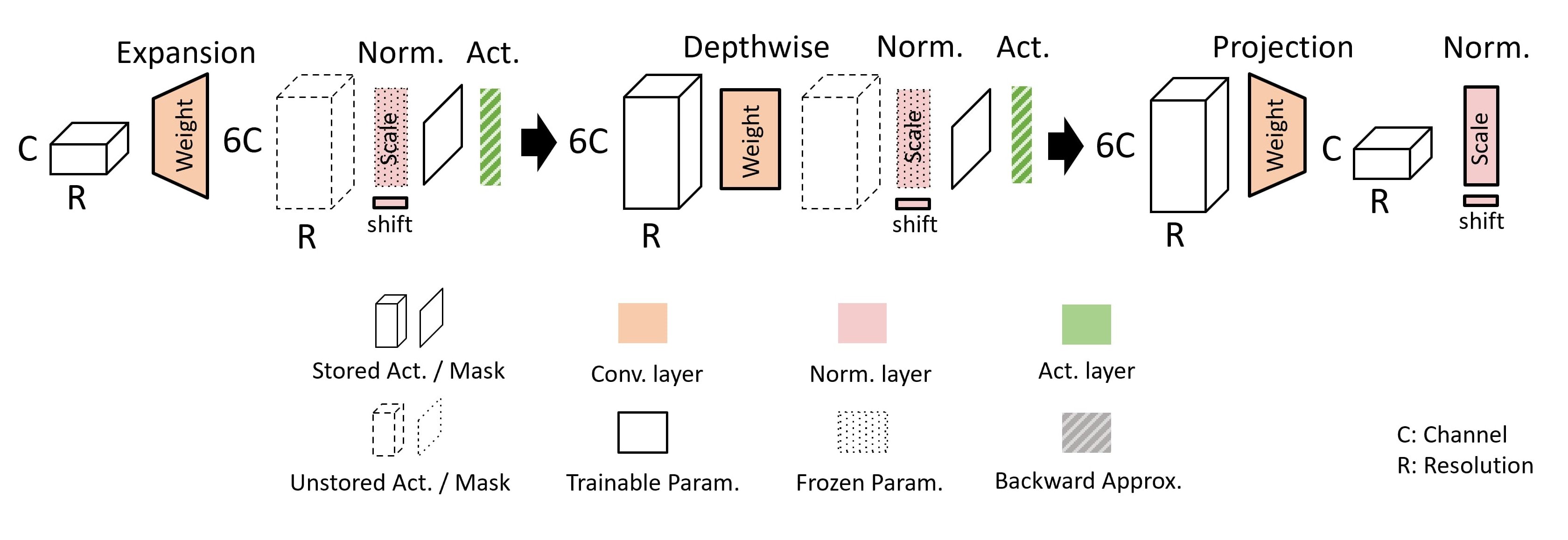

Overview of a MobileTL Block

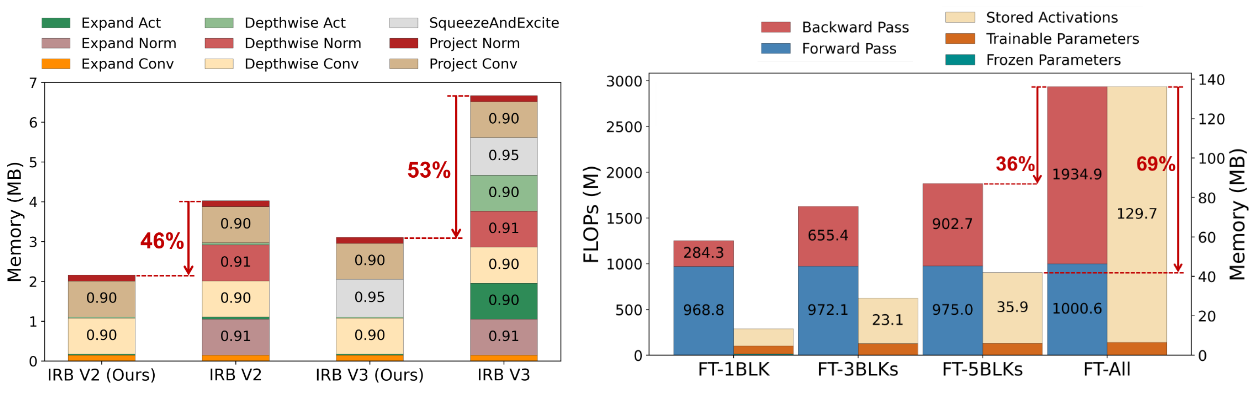

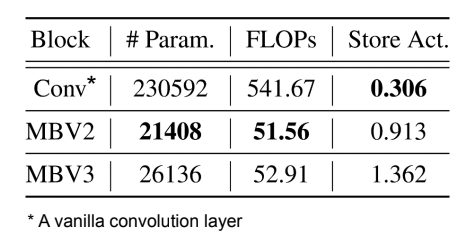

MobileTL is an efficient training scheme for IRBs. To avoid storing activation maps for the two normalization layers that follow the expansion and depthwise convolutions, we train only their shifts and freeze their scales and global statistics. The weights in convolutional layers are trained as usual. To adapt the distribution to the target dataset, we update the scale, shift, and global statistics for the last normalization layer in the block. MobileTL approximates the backward function of activation layers, e.g., ReLU6 and Hard-Swish, by a signed function, so only a binary mask is stored for the activation backward pass. Our method reduces the memory consumption by 46.3% and 53.3% for MobileNetV2 and MobileNetV3 IRBs, respectively.
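The binary-mask idea for activation layers can be illustrated with a minimal, framework-free sketch. It is not the authors' implementation: the function names are hypothetical, and the mask rule used here (1 where the input is positive, 0 otherwise) is an assumption about what "signed function" means; the paper may define the thresholds differently.

```python
def relu6(x):
    """ReLU6: clamp x to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


def hard_swish(x):
    """Hard-Swish: x * ReLU6(x + 3) / 6."""
    return x * relu6(x + 3.0) / 6.0


def forward_with_mask(activation, inputs):
    """Apply the activation and keep only a boolean mask for backward.

    Assumption: the mask is the sign of the input (True where x > 0).
    The full-precision inputs are not kept.
    """
    outputs = [activation(x) for x in inputs]
    mask = [x > 0.0 for x in inputs]
    return outputs, mask


def backward_from_mask(grad_outputs, mask):
    """Approximate backward: pass the gradient where the mask is set."""
    return [g if m else 0.0 for g, m in zip(grad_outputs, mask)]


def pack_bits(mask):
    """Pack the boolean mask into bytes, one bit per element."""
    packed = bytearray((len(mask) + 7) // 8)
    for i, m in enumerate(mask):
        if m:
            packed[i // 8] |= 1 << (i % 8)
    return bytes(packed)


inputs = [-4.0, -1.0, 0.5, 2.0, 7.0]
outputs, mask = forward_with_mask(hard_swish, inputs)
grads = backward_from_mask([1.0] * len(inputs), mask)
print(grads)                 # [0.0, 0.0, 1.0, 1.0, 1.0]
print(len(pack_bits(mask)))  # 1 byte, versus 20 bytes for five float32 values
```

Storing one bit per element instead of a 32-bit float shrinks what this layer saves for backward by a factor of 32. The cost is that the gradient is only approximate: under the assumed rule, Hard-Swish inputs between -3 and 0 get a zero gradient even though the exact derivative there is nonzero.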

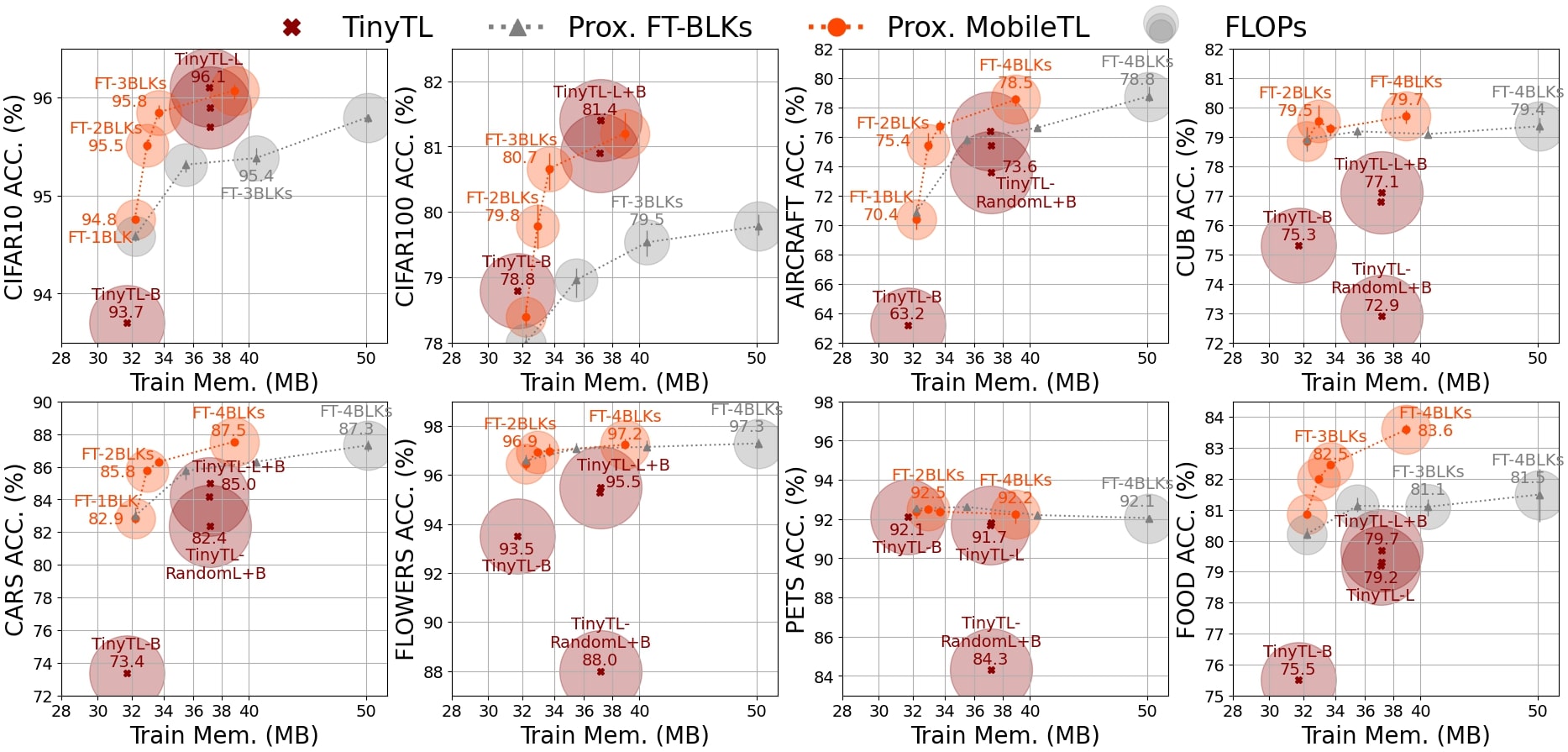

Main Results

We evaluate MobileTL on Proxyless Mobile (Cai, Zhu, and Han 2019) and transfer it to eight downstream tasks. We compare our method (Prox. MobileTL, in orange) with vanilla fine-tuning of a few IRBs of the model (Prox. FT-BLKs, in grey) and with TinyTL (Cai et al. 2020). MobileTL maintains Pareto optimality on all datasets and improves accuracy over TinyTL on six of the eight datasets.

Demo

Citation

@inproceedings{chiang2022mobiletl,
  title = {MobileTL: On-device Transfer Learning with Inverted Residual Blocks},
  author = {Chiang, Hung-Yueh and Frumkin, Natalia and Liang, Feng and Marculescu, Diana},
  booktitle = {Proceedings of the AAAI Conference on Artificial Intelligence (AAAI)},
  year = {2023},
}

Acknowledgements

This research was supported in part by NSF CCF Grant No. 2107085, NSF CSR Grant No. 1815780, and the UT Cockrell School of Engineering Doctoral Fellowship. Additionally, we thank Po-han Li for his help with the proof.