UniQL: Unified Quantization and Low-rank Compression for Adaptive Edge LLMs

ICLR 2026

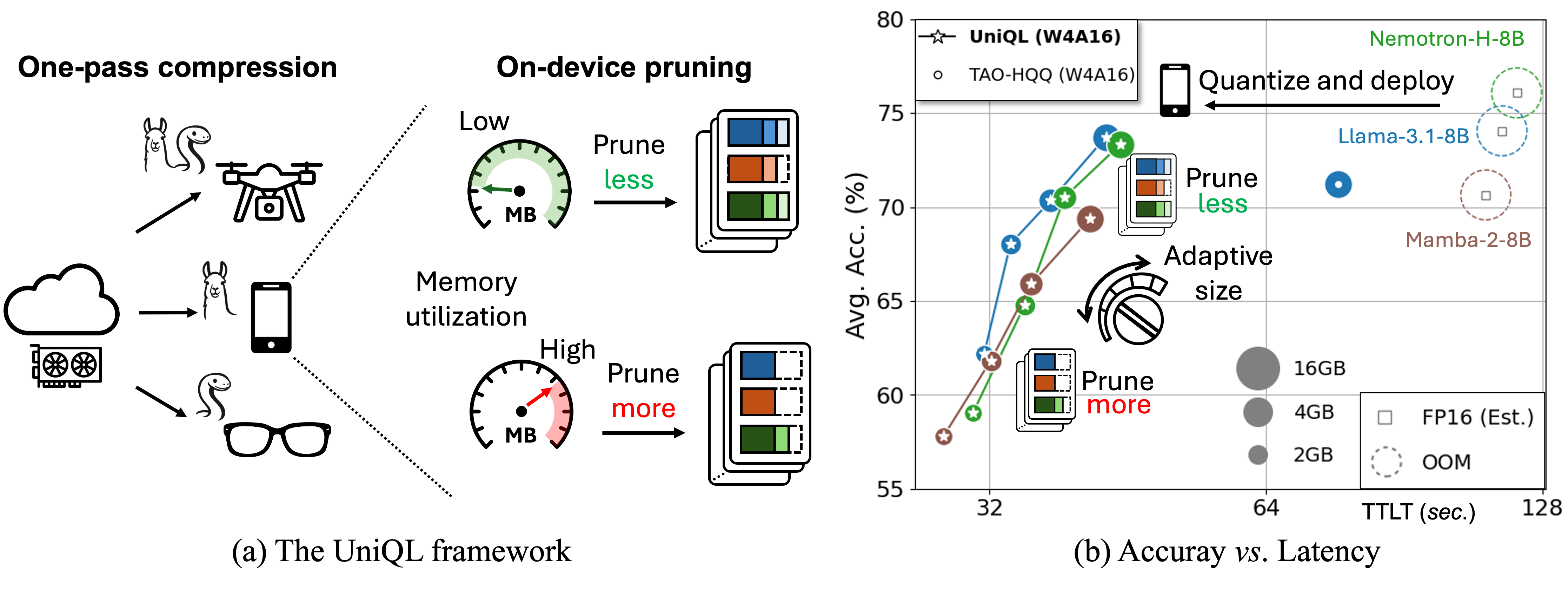

📚 Unified support for Transformers, SSMs, and hybrid models

🔗 One-pass framework for quantization + structured low-rank pruning

⚡ 2.7×–3.4× latency speedups, 4×–5.7× memory reductions

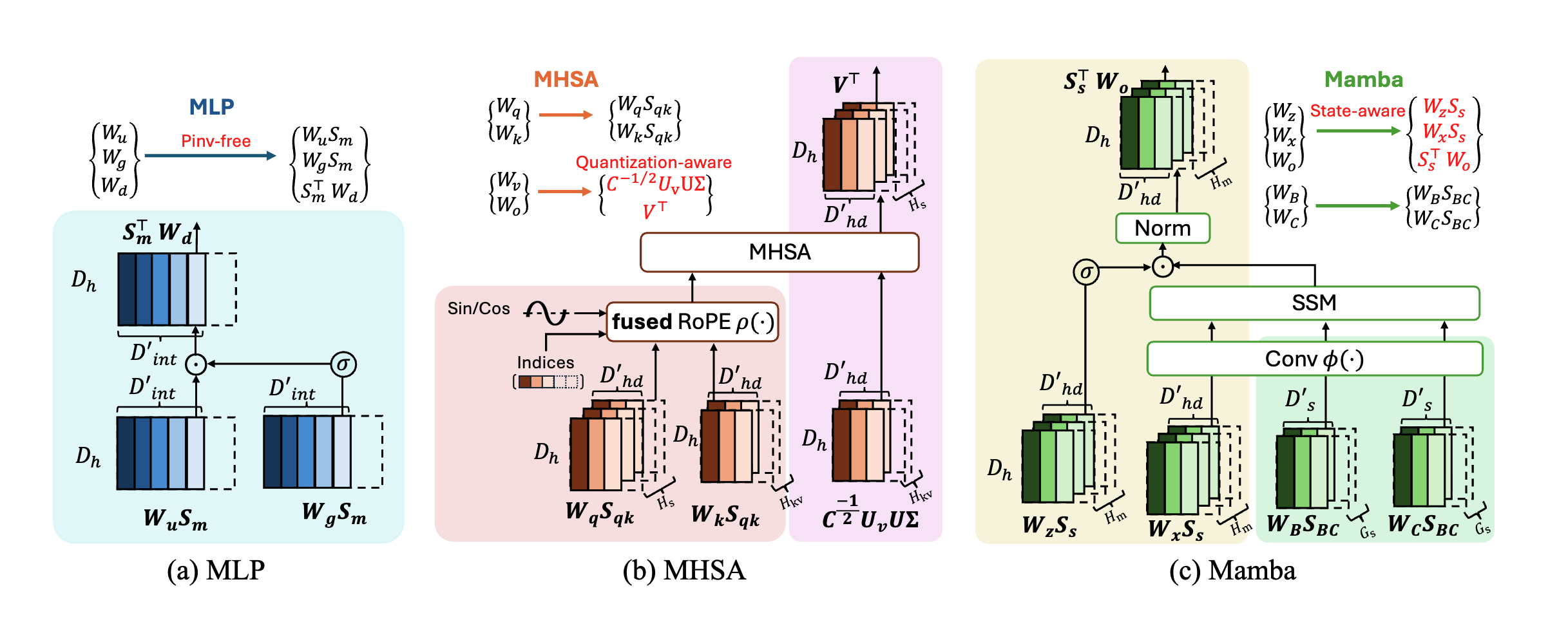

Support for Transformer and Mamba blocks

- Joint weight decomposition (weights in the same group share a background color)

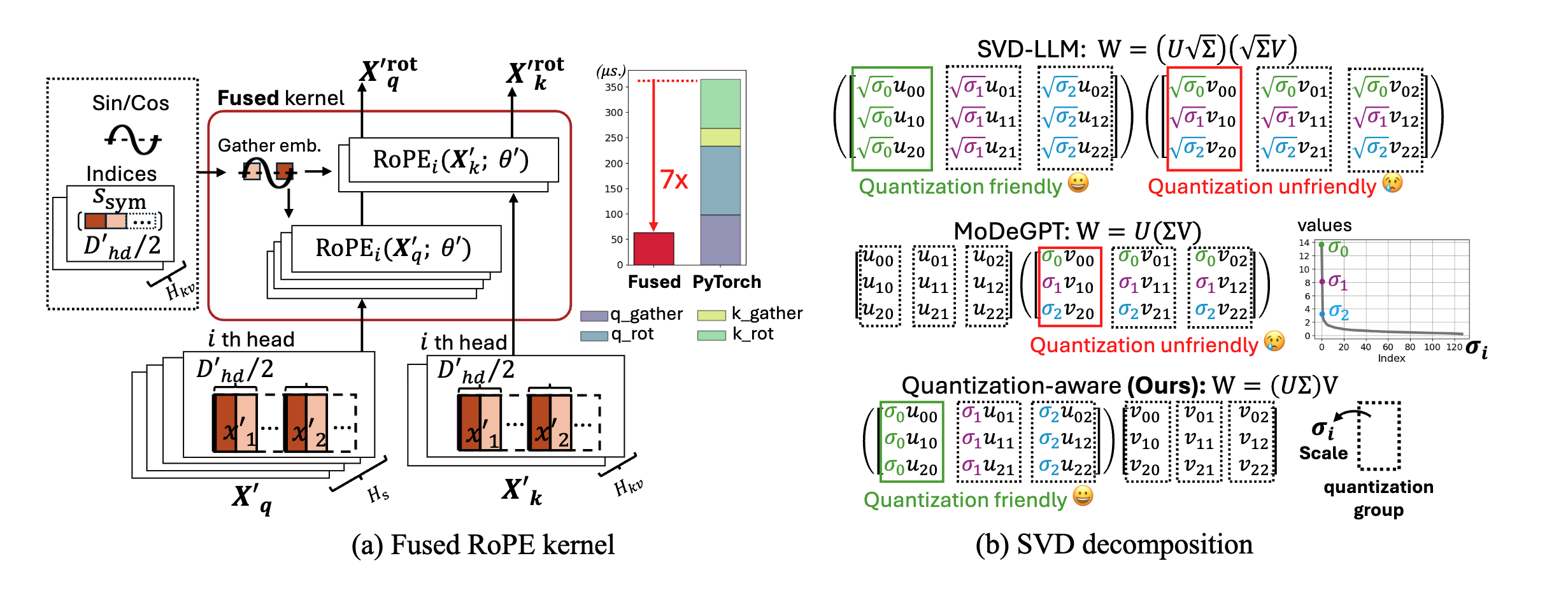

Joint design of quantization and structured pruning

- Fused RoPE to support and accelerate pruned Q and K

- Quantization-aware SVD to reduce quantization error
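To make the low-rank idea concrete, here is a minimal NumPy sketch of a plain truncated SVD whose components come out sorted by singular value, so keeping the first `rank` components gives a smaller factorization. It does not reproduce UniQL's quantization-aware decomposition; the helper names `svd_factors` and `fake_quantize`, the 4-bit setting, and the group size of 32 are all assumptions made for illustration. The round-to-nearest quantizer is only there to show how one might measure the quantization error of the factors.

```python
import numpy as np

def svd_factors(W):
    """Factor W (out x in) as A @ B, components sorted by singular value."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)  # S is in descending order
    sqrt_s = np.sqrt(S)
    A = U * sqrt_s                # (out, r): scale each column of U
    B = sqrt_s[:, None] * Vt      # (r, in): scale each row of Vt
    return A, B

def fake_quantize(X, bits=4, group=32):
    """Hypothetical symmetric per-group round-to-nearest quantizer.

    Returns the dequantized values so the error can be measured directly.
    Assumes X.size is divisible by `group`.
    """
    flat = X.reshape(-1, group)
    qmax = 2 ** (bits - 1) - 1
    scale = np.abs(flat).max(axis=1, keepdims=True) / qmax
    scale = np.where(scale == 0, 1.0, scale)  # avoid division by zero
    q = np.clip(np.round(flat / scale), -qmax - 1, qmax)
    return (q * scale).reshape(X.shape)

rng = np.random.default_rng(0)
W = rng.standard_normal((64, 128))
A, B = svd_factors(W)

def rel_error(rank):
    """Relative Frobenius error of the rank-truncated reconstruction."""
    approx = A[:, :rank] @ B[:rank, :]
    return np.linalg.norm(W - approx) / np.linalg.norm(W)

# Error shrinks as more of the sorted components are kept.
errors = [rel_error(r) for r in (16, 32, 64)]

# Quantization error of the factors themselves (plain SVD, not quantization-aware).
quant_err = np.abs(fake_quantize(A) - A).max()
```

Because the components are sorted, truncating to a smaller rank is just a prefix slice of `A` and `B`, which is what makes a single decomposition reusable across pruning rates.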

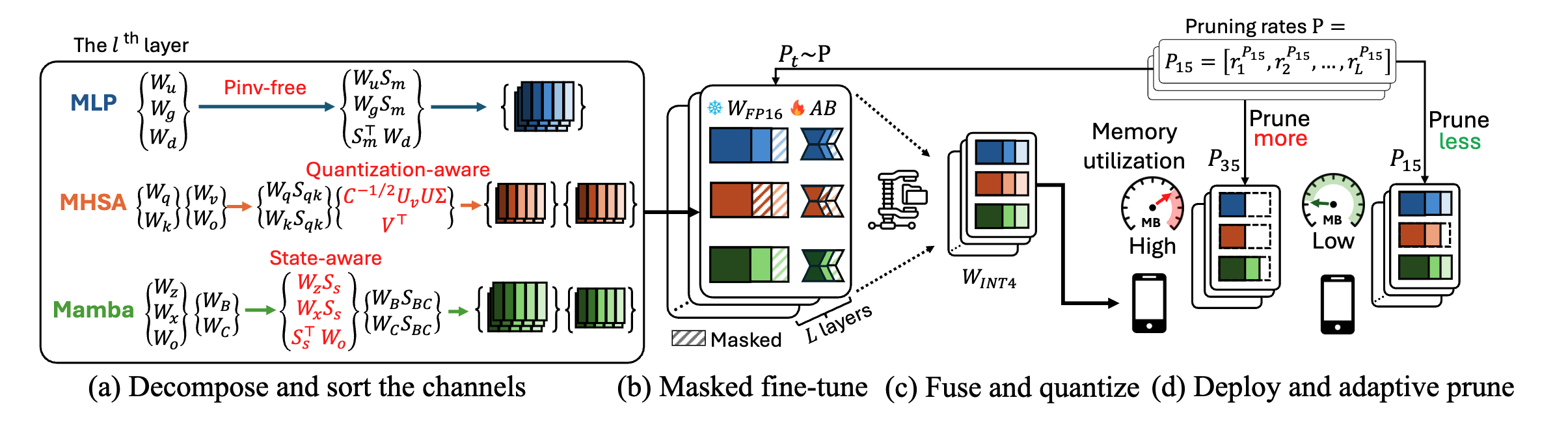

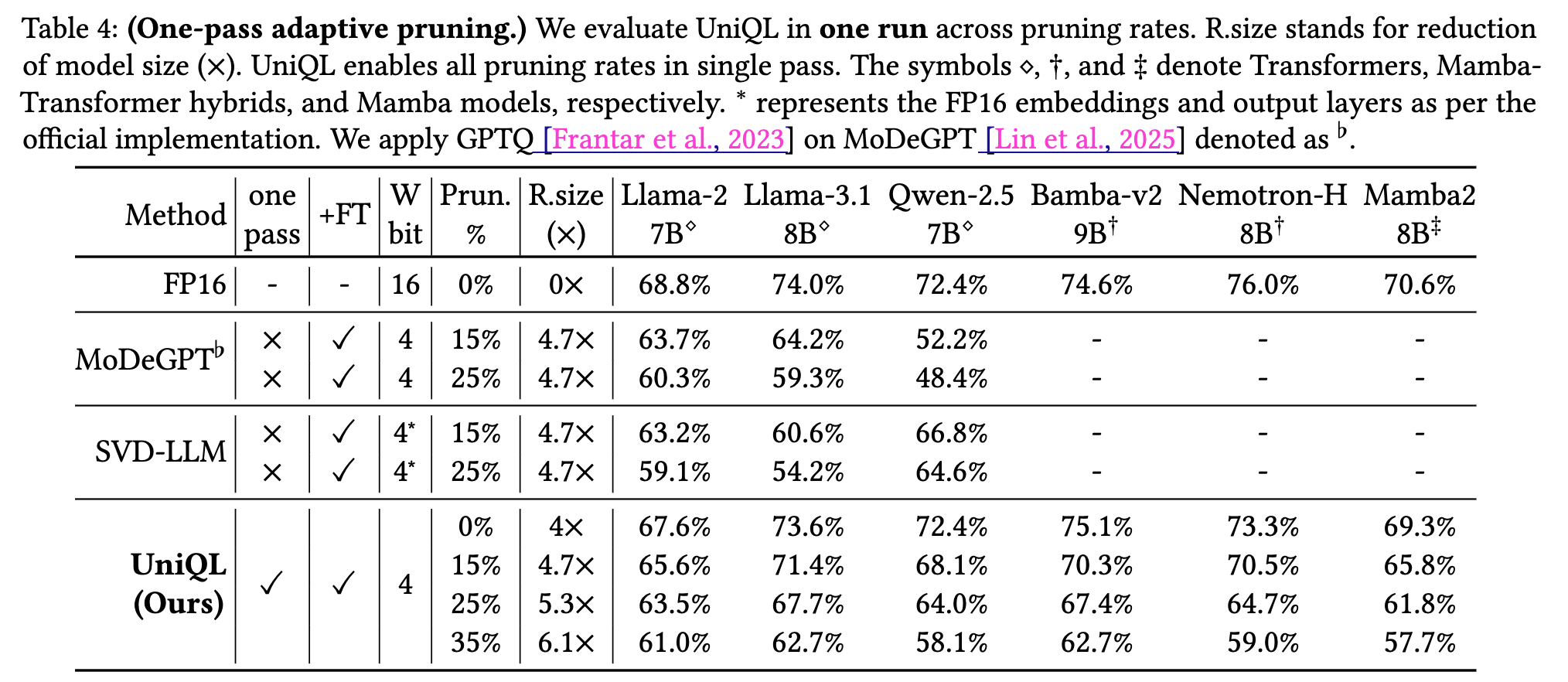

One-pass framework supporting all pruning rates

- (a) Pseudo-inverse-free, quantization-aware, and state-aware matrix decomposition methods are applied to the grouped weights to obtain sorted weights

- (b) During fine-tuning, we sample global pruning rates and mask out the corresponding weight channels

- (c) The refined patches are fused into the weights, followed by model quantization for deployment

- (d) Based on system utilization, we perform on-device adaptive pruning of the quantized model
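Steps (b) and (d) can be illustrated with a small NumPy sketch, assuming the channels of a factored weight `A @ B` are already sorted by importance as in step (a). The function names, the toy shapes, and the set of candidate pruning rates are hypothetical and not taken from the UniQL code. The point is only that masking the trailing channels during fine-tuning gives the same output as physically slicing them off at deployment, so one set of weights can serve every pruning rate.

```python
import numpy as np

def keep_count(width, rate):
    """Number of leading channels kept at a pruning rate in [0, 1)."""
    return max(1, int(round(width * (1.0 - rate))))

def masked_forward(x, A, B, rate):
    """Fine-tuning view: zero the trailing channels with a mask."""
    r = A.shape[1]
    mask = np.zeros(r)
    mask[:keep_count(r, rate)] = 1.0
    return ((x @ B.T) * mask) @ A.T

def sliced_forward(x, A, B, rate):
    """Deployment view: drop the trailing channels by slicing."""
    k = keep_count(A.shape[1], rate)
    return (x @ B[:k].T) @ A[:, :k].T

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 16))    # (out, r), channels assumed sorted
B = rng.standard_normal((16, 12))   # (r, in)
x = rng.standard_normal((4, 12))    # a batch of 4 inputs

candidate_rates = [0.0, 0.25, 0.5]  # hypothetical global pruning rates
sampled = rng.choice(candidate_rates, size=3)  # one rate per training step

# Masking and slicing agree for every rate, so no re-decomposition is needed.
agree = all(
    np.allclose(masked_forward(x, A, B, r), sliced_forward(x, A, B, r))
    for r in candidate_rates
)
```

On device, choosing a different rate then amounts to changing `k`, which a runtime could do in response to current memory or latency pressure.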

Main results

Citation

@inproceedings{chiang2026uniql,
  title={UniQL: Unified Quantization and Low-rank Compression for Adaptive Edge LLMs},
  author={Chiang, Hung-Yueh and Chang, Chi-Chih and Lu, Yu-Chen and Lin, Chien-Yu and Wu, Kai-Chiang and Abdelfattah, Mohamed S. and Marculescu, Diana},
  booktitle={International Conference on Learning Representations (ICLR)},
  year={2026},
}

Acknowledgements

This work was supported in part by the ONR Minerva program, NSF CCF Grant No. 2107085, iMAGiNE - the Intelligent Machine Engineering Consortium at UT Austin, UT Cockrell School of Engineering Doctoral Fellowships, NSF CAREER Grant No. 2339084, an Nvidia research gift, and Taiwan’s NSTC Grant No. 111-2221-E-A49-148-MY3.